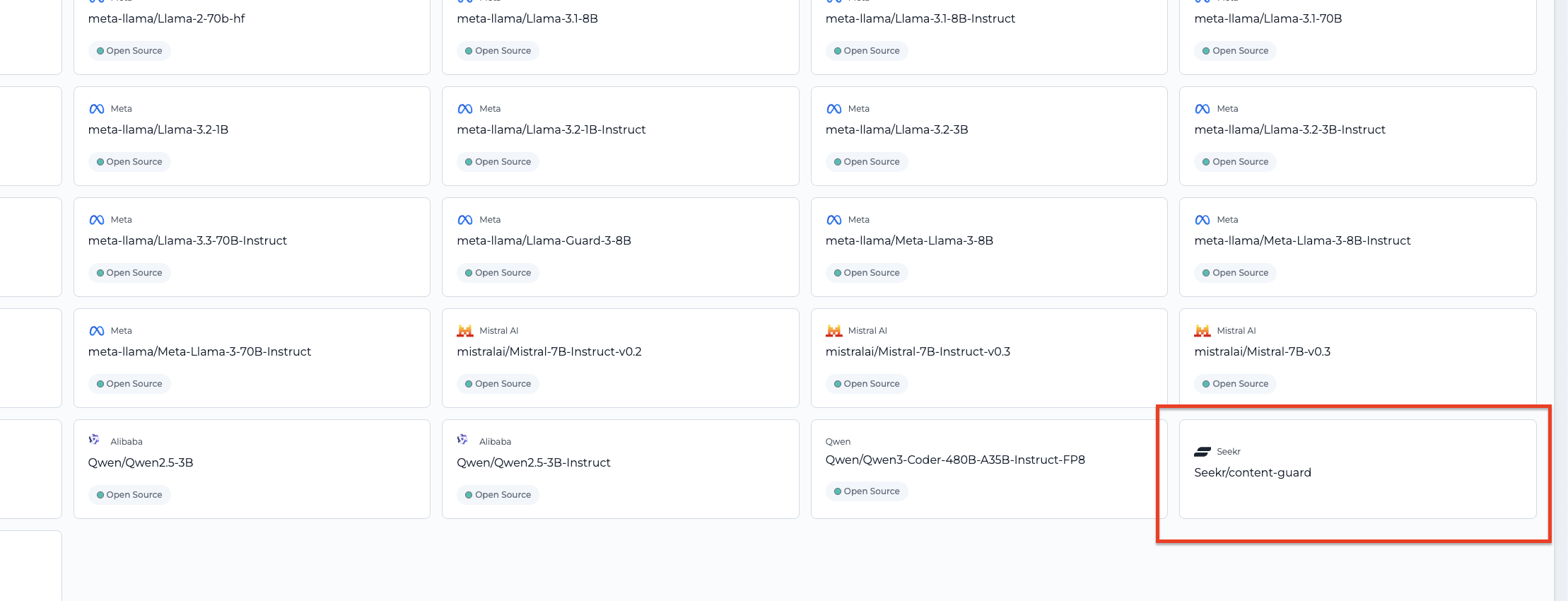

Seekr Content-Guard model

This page is about how to use the Seekr Content Guard model in SeekrFlow.

Full Model Guide (GARM + Civility)

Summary

Seekr Content-Guard is a classification model designed for podcast content moderation. It analyzes podcast episode transcripts to detect harmful, inappropriate, or offensive speech using industry-standard GARM risk categories and Seekr’s proprietary Civility scoring system. The model powers brand safety and suitability decisions by evaluating each episode’s content for advertiser risk and conversational tone.

- GARM content category risks refer to standardized classifications of harmful or sensitive content based on the Global Alliance for Responsible Media (GARM) framework. These include categories like hate speech, adult content, violence, and misinformation. The model evaluates transcript segments to determine which categories are present and assigns a risk level from `None` to `Floor`, indicating how suitable the content is for advertising.

- Seekr Civility Score™ measures how respectful or hostile a podcast conversation is. It detects personal attacks and severe language targeting protected traits (e.g., race, religion, gender identity). The score reflects the frequency and severity of these attacks. For each chunk, the model outputs a label: `severe_attack`, `non_severe_attack`, or `no_attack`, depending on the type of attack.

Key Features

- Podcast Transcript Classification

  Specifically built to analyze and classify text from podcast episode transcripts. This ensures higher accuracy for spoken language patterns, informal tone, and multi-speaker dialogue commonly found in podcasts.

  → Benefit: Users get accurate, purpose-tuned moderation for podcast content rather than relying on generic classifiers.

- GARM Risk Detection (11 Categories)

  The model identifies harmful or sensitive content across 11 standardized GARM categories (e.g. Hate Speech, Sexual Content, Violence). Each detection is labeled with a 5-level severity rating: `None`, `Low`, `Medium`, `High`, or `Floor`.

  → Benefit: Enables users to flag, filter, or avoid monetizing episodes that contain content misaligned with brand safety standards.

- Seekr Civility Score™

  Evaluates tone by detecting personal and severe attacks in conversation. Each chunk receives a label: `severe_attack`, `non_severe_attack`, or `no_attack`, depending on whether the model detected an attack and of what type.

  → Benefit: Helps users understand how hostile, respectful, or inflammatory a conversation is, which is useful for advertisers, distributors, or platforms promoting positive content.

- Chunk-Level Analysis

  Optimized for short, focused transcript segments. The model evaluates each chunk independently, enabling granular scoring.

  → Benefit: Allows users to pinpoint exactly where risky or hostile content appears in an episode, which is useful for redaction, highlighting, or fine-tuned moderation.

- Supports Manual Aggregation Across Episodes and Shows

  Although the model returns scores per chunk, its structure supports downstream aggregation logic, making it possible to roll up chunk results into episode-level and show-level summaries.

  → Benefit: Powers scalable analysis across full podcast catalogs, enabling content ranking, flagging, or visibility rules based on safety thresholds.

Target Input Type

The Seekr ContentGuard model is specifically designed and fine-tuned for podcast episode analysis. To ensure accurate classification and scoring, the model expects input in the form of short, speaker-specific text segments derived from audio recordings.

- Use Only on Podcast Audio Content

  The model is intended for spoken-word content from podcast episodes. It is not designed to evaluate written articles, blog posts, social media, or scripted video transcripts. Its accuracy depends on the natural patterns and informal structures of real podcast conversations.

- Transcripts Must Be Derived from Audio Files

  Input must come from a transcript that was generated directly from podcast audio, typically pulled from `.mp3` or `.mp4` files listed in a podcast’s RSS feed. These files contain the full spoken episode, which must be converted to text prior to classification.

- Transcript Must Include Diarization and Timestamps

  Each speaker’s dialogue must be separated (speaker diarization) and aligned with timestamps. This is essential because the model works best on short, speaker-specific chunks. Without diarization, the content may be misattributed or scored inaccurately.

- Chunked Input Format Required

  The model does not support full-episode input. Transcripts must be split into manageable chunks (e.g., 20–60 seconds of speech) to ensure accurate scoring and context resolution.

→ To get the most value from Seekr ContentGuard, input should be cleanly diarized, short-form text derived from actual podcast conversations.

GARM + Civility Explained

Seekr ContentGuard produces two independent but complementary outputs for each segment of podcast content:

- GARM Risk Detection, based on content categories defined by global advertising standards

- Civility Scoring, based on the tone and behavioral quality of speaker dialogue

Each system is described in detail below:

GARM Risk Detection

GARM (Global Alliance for Responsible Media) provides a standardized framework used across the advertising industry to define what content may be considered unsafe or unsuitable for brand monetization.

Seekr ContentGuard evaluates each transcript chunk for signs of content that falls under one or more of GARM’s 11 core categories. If present, it assigns a risk level to each detected category.

GARM Categories Include:

- Adult & Explicit Sexual Content

- Arms & Ammunition

- Crime & Harmful acts to individuals and Society, Human Right Violations

- Death, Injury or Military Conflict

- Debated Sensitive Social Issue

- Hate speech & acts of aggression

- Illegal Drugs/Tobacco/e-cigarettes/Vaping/Alcohol

- Obscenity and Profanity, including language, gestures, and explicitly gory, graphic or repulsive content intended to shock and disgust

- Online piracy

- Spam or Harmful Content

- Terrorism

Each of these categories reflects a class of content that may violate platform policies or pose brand suitability concerns. Categories are defined using official GARM guidance, with some adaptation for spoken-word podcast content.

GARM Risk Levels

Every detected category receives one of the following severity levels:

- `None`: not present

- `Low`: educational or scientific discussion

- `Medium`: detailed reporting or dramatization

- `High`: insensitive, graphic, or glamorized portrayal

- `Floor`: explicitly harmful content; not suitable for any ads

Use Case:

GARM scoring enables advertisers, platforms, or moderators to:

- Flag or restrict monetization of episodes

- Identify high-risk topics

- Assess safety for brand placement at the segment level

Seekr Civility Score™

Civility Score™ is Seekr’s proprietary system for measuring how respectful or hostile podcast conversations are. It operates independently from GARM and focuses not on what is being discussed, but how it is being said.

The model classifies tone by detecting attacks within each chunk — either general or severe — and then uses the frequency of these attacks per hour to determine a Civility Score.

Two Types of Attacks:

- General Attacks

  - Insults or demeaning language targeting someone’s intelligence, character, appearance, etc.

  - Includes name-calling, mocking, threats, or repeated negative characterizations

- Severe Attacks

  - Directed at someone based on legally protected characteristics such as:

    - Race, religion, gender identity, sexual orientation

    - Disability, age (40+), national origin, pregnancy, veteran status

  - Often involve slurs or hate speech

General attacks degrade tone. Severe attacks immediately signal unacceptable hostility and influence Civility Scores.

Civility Output Format

For each chunk, Seekr ContentGuard will return:

- `attack_type`

  Returns one of:

  - `"severe_attack"`: attack targets a protected characteristic (e.g., race, gender, religion, disability)

  - `"non_severe_attack"`: insult, mockery, threat, or derogatory language that does not target a protected class

  - `"no_attack"`: no attack detected in the chunk

The model only labels attacks for a chunk. It does not calculate per-episode Civility Scores. You must do that aggregation yourself.

Scoring Logic:

The Civility Score is based on:

`Civility Score = (Total Attacks ÷ Total Episode Duration in Hours)`

Breakdown:

- Total Attacks Detected

  Sum of all attacks from every chunk in the episode. You may optionally:

  - Count severe attacks as more than 1 (e.g., ×2 or ×3)

  - Exclude chunks labeled `"no_attack"` from calculations

- Episode Duration in Hours

  - Either: `minutes ÷ 60` or `hours + (minutes ÷ 60)`

  - Use precise decimal format for consistency
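
The scoring logic above can be sketched in a few lines of Python. This is a minimal illustration, not Seekr's implementation: the function name `civility_score`, the `severe_weight` parameter, and the choice to pass duration in minutes are all assumptions made for the example. The label strings follow the Civility Output Format section.

```python
from typing import Iterable


def civility_score(
    labels: Iterable[str],
    duration_minutes: float,
    severe_weight: float = 1.0,
) -> float:
    """Return attacks per hour for one episode.

    labels: one attack_type label per chunk.
    severe_weight: hypothetical multiplier for severe attacks (e.g. 2 or 3).
    """
    if duration_minutes <= 0:
        raise ValueError("duration_minutes must be positive")

    total_attacks = 0.0
    for label in labels:
        if label == "severe_attack":
            total_attacks += severe_weight
        elif label == "non_severe_attack":
            total_attacks += 1.0
        # "no_attack" chunks contribute nothing to the total.

    duration_hours = duration_minutes / 60.0
    return total_attacks / duration_hours
```

For instance, six `non_severe_attack` chunks in a 180-minute episode give `6 / 3.0`, i.e. 2.0 attacks per hour, regardless of how many `no_attack` chunks surround them.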

Output Aggregation

You are responsible for:

- Counting attacks across all chunks in an episode

- Applying your own scoring tiers or buckets (e.g., High, Medium, Low, No Civility)

You can also calculate Civility at the show level by averaging across multiple episode scores.

Suggested Civility Buckets (Optional)

You can define your own thresholds. SeekrAlign used a proprietary system, but you are allowed to create your own, for example:

| Score (attacks/hr) | Civility Label |
|---|---|
| 0–0.5 | High Civility |
| 0.51–2.0 | Medium Civility |
| 2.01–4.0 | Low Civility |
| > 4.0 | No Civility |

These values are illustrative only. You may tune your thresholds based on your content standards.

Example Calculations

Example 1: Clean Long Episode

- Episode: Joe Rogan Experience w/ Tim Dillon

- Duration: 3 hours

- Attacks: 6 general attacks

- Score: 6 ÷ 3 = 2 attacks/hour

- Bucket: Medium Civility

Example 2: Medium-Length, Spikier Episode

- Duration: 1 hour 45 minutes = 1.75 hours

- Attacks: 6 total

- Score: 6 ÷ 1.75 ≈ 3.43 attacks/hour

- Bucket: Low Civility

Example 3: Short Episode With One Severe Attack

- Duration: 45 minutes = 0.75 hours

- Attacks: 1 severe attack

- Score: 1 ÷ 0.75 ≈ 1.33 attacks/hour

- Bucket: even though the rate alone would fall in Medium Civility, the presence of a severe attack may force the score to No Civility

Implementation Tips

- Use attack counts per hour for comparability across episodes

- Ensure diarization is accurate — broken speaker turns can cause over-chunking and inflate attack counts

- For stricter brands, you may set a 0-tolerance rule for any severe attacks

This approach gives you full control over Civility labeling logic while relying on Seekr ContentGuard to flag the presence and type of attacks at a granular level.
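
As a rough sketch of how the bucketing and an optional zero-tolerance rule could fit together, the snippet below maps an attacks-per-hour score to a label. The thresholds are the illustrative ones from the suggested buckets; treating anything above 4.0 as No Civility, and the names `BUCKETS`, `civility_label`, `has_severe_attack`, and `zero_tolerance`, are assumptions for this example.

```python
# Illustrative (upper bound, label) pairs; tune these to your own standards.
BUCKETS = [
    (0.5, "High Civility"),
    (2.0, "Medium Civility"),
    (4.0, "Low Civility"),
]


def civility_label(
    score: float,
    has_severe_attack: bool = False,
    zero_tolerance: bool = False,
) -> str:
    """Map an attacks-per-hour score to a Civility label."""
    if score < 0:
        raise ValueError("score must be non-negative")

    # Optional policy: any severe attack overrides the rate-based bucket.
    if zero_tolerance and has_severe_attack:
        return "No Civility"

    for upper_bound, label in BUCKETS:
        if score <= upper_bound:
            return label
    return "No Civility"  # assumed catch-all above the last threshold
```

Applied to the three worked examples: `civility_label(6 / 3)` gives Medium Civility, `civility_label(6 / 1.75)` gives Low Civility, and `civility_label(1 / 0.75, has_severe_attack=True, zero_tolerance=True)` gives No Civility.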

Use Case:

Civility scoring enables users to:

- Evaluate the tone of a podcast beyond topic-based classification

- Identify respectful vs. hostile episodes

- Enable tone-sensitive advertising, platform moderation, or content surfacing logic

Relationship Between GARM and Civility

Although both systems score podcast content for risk or offensiveness, they serve different purposes:

| Dimension | GARM | Civility |
|---|---|---|
| Focus | What is being said | How it is being said |
| Output | Category + Risk Level | Attack Type + Civility Score + Label |
| Use Case | Brand safety / content policy | Tone detection / behavioral quality |
| Interdependence | Scored independently | Not influenced by GARM detection |

Together, GARM and Civility allow for comprehensive podcast content analysis — combining topic-based risk with speaker tone and conduct.

What The Seekr Model Provides vs What You Can Build Separately

Seekr ContentGuard is a model-only offering. SeekrFlow provides everything needed to deploy and interact with the model, but you are responsible for all surrounding infrastructure — including ingestion, transcript prep, and result aggregation.

SeekrFlow Provides:

These are the components that come with the platform and are fully managed by Seekr:

- `seekr/content-guard` Hosted Model

  A pre-trained, production-ready model available in the SeekrFlow model library for secure deployment via SaaS.

- Model Deployment via UI or SDK

  Deploy and manage the model through the SeekrFlow SaaS interface or programmatically using the Seekr SDK.

- GARM + Civility Scoring per Chunk

  Submit a single chunk of text (speaker-level, diarized) to the model and receive structured predictions for:

  - GARM risk categories and severity levels

  - Civility attack labels (General/Severe)

- SDK Access for Programmatic Calls

  The Seekr SDK can be used to batch, submit, and manage inference jobs with structured output returned per chunk.

You Are Able To Build Separately:

To fully integrate and operationalize Seekr ContentGuard (e.g. to recreate something like SeekrAlign), you are responsible for building the following pipeline components:

- RSS Feed Parsing

  Ingest and parse podcast RSS feeds to retrieve episode metadata and `.mp3` or `.mp4` URLs.

- Audio Extraction from Episodes

  Download episode audio files and prepare them for transcription (extract clean audio format if needed).

- Transcription with Diarization

  Use a model like Whisper or other transcription systems to convert audio into diarized text (speaker-separated with timestamps). This is critical for chunking and contextual accuracy.

- Transcript Chunking

  Split the transcript into short, speaker-specific chunks (usually 20–60 seconds) to fit the model’s input expectations. Ensure text is clean, attributed, and JSON-ready.

- Model Inference Pipeline

  Submit each chunk individually to the deployed Seekr ContentGuard model via API/SDK. Capture and store each chunk’s prediction (GARM and Civility) as structured output.

- Aggregation Logic (Optional)

  Implement your own rules for calculating episode-level and show-level scores by combining results across chunks:

  - For GARM: Aggregate risk levels per category across the episode

  - For Civility: Calculate average score per hour or apply thresholds

- Storage, Retrieval, and Reporting (Optional)

  Store the scored results in a database for future lookup or reporting. You may also build:

  - A frontend dashboard or viewer

  - A reporting system for brand safety insights

  - An API layer to serve scored metadata to other apps or users

| Capability | Provided by Seekr | Built by You |
|---|---|---|
| Hosted Model | Yes | |
| GARM + Civility Scoring per Chunk | Yes | |
| API / SDK Access | Yes | |
| Episode Audio Ingestion | | Yes |
| Transcript Generation (w/ diarization) | | Yes |
| Chunking and Preprocessing | | Yes |
| Batch Model Inference | | Yes |
| Score Aggregation (Episode/Show) | | Yes |
| Output Storage and UI | | Yes |

This separation ensures Seekr can provide a flexible scoring engine — while you retain full control over how you extract, process, store, and display results within your own product or moderation workflow.

Pipeline Architecture

The following outlines the full end-to-end pipeline required to operationalize the Seekr ContentGuard model for podcast scoring. While SeekrFlow provides the hosted model and API for scoring individual transcript chunks, everything else in the pipeline must be built and maintained by your team.

1. Pull .mp3 or .mp4 File from Podcast RSS Feed

- Parse RSS feeds to locate the audio file URLs for each podcast episode.

- Most podcast feeds include a direct link to the `.mp3` (or sometimes `.mp4`) file containing the episode audio.

- These files should be downloaded or streamed securely in preparation for transcription.

2. Transcribe Audio to Text with Whisper (Diarization Required)

- Use Whisper or an equivalent ASR (Automatic Speech Recognition) tool to convert the raw audio into a transcript.

- Ensure the tooling supports speaker diarization (distinguishing who said what) and timestamps (to align text back to audio).

- Output format should include:

  - Accurate speaker labels (e.g., Speaker 1, Speaker 2)

  - Timestamps for each utterance

  - Confidence scores (optional but helpful)

3. Chunk Transcript into ~30–60 Second Speaker Segments

- Segment the transcript into small, speaker-level chunks of approximately 30 to 60 seconds each.

- Chunking is required for accurate model inference, as the model is optimized for short conversational windows.

- Maintain metadata per chunk:

  - Speaker ID

  - Start and end timestamps

  - Episode title and show name

  - Raw transcript text

4. Send Each Chunk to Seekr/content-guard

- Use the SeekrFlow API or SDK to submit each chunk for evaluation.

- Each chunk will return:

  - GARM content category labels and severity scores

  - Civility classification (attack type, if any); the Civility Score itself is derived later, during aggregation

- Parallelize this step for batch processing performance.
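
One possible way to parallelize chunk scoring is a thread pool, since each call is an independent network request. The sketch below uses only the Python standard library. `score_chunk` stands for whatever function in your code calls the deployed model; `fake_score_chunk` is a hypothetical stand-in so the example runs without network access, and its return shape is not the model's actual response format.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def fake_score_chunk(chunk: dict) -> dict:
    """Hypothetical stand-in for a real call to the deployed model."""
    return {"attack_type": "no_attack", "garm": [], "chars": len(chunk["text"])}


def score_chunks_parallel(
    chunks: list,
    score_chunk: Callable[[dict], dict],
    max_workers: int = 8,
) -> list:
    """Score chunks concurrently and keep results aligned with their chunks."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map yields results in input order, so zip() stays aligned.
        results = list(pool.map(score_chunk, chunks))
    return [{**chunk, "result": result} for chunk, result in zip(chunks, results)]
```

Keeping each result attached to its chunk metadata makes the later storage and aggregation steps simpler. A real pipeline would likely also add retries and rate limiting, which are omitted here.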

5. Store Results

- Store the raw classification output along with associated chunk metadata.

- Consider using a document store or SQL database that allows fast retrieval and aggregation by:

  - Episode

  - Show

  - Timecode

  - Speaker
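
As one illustration of the SQL option, the sketch below stores per-chunk results in SQLite using Python's built-in `sqlite3` module. The table name `chunk_scores`, its columns, and the helper names are all assumptions for this example; any store that can group by show, episode, timecode, and speaker would serve.

```python
import json
import sqlite3


def init_store(conn: sqlite3.Connection) -> None:
    """Create a hypothetical per-chunk results table."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS chunk_scores (
               show TEXT NOT NULL,
               episode_id TEXT NOT NULL,
               speaker TEXT,
               start_s REAL NOT NULL,
               end_s REAL NOT NULL,
               attack_type TEXT,
               garm_json TEXT NOT NULL
           )"""
    )
    conn.execute(
        "CREATE INDEX IF NOT EXISTS idx_episode "
        "ON chunk_scores (show, episode_id, start_s)"
    )


def save_chunk(conn: sqlite3.Connection, chunk: dict, garm: list, attack_type: str) -> None:
    """Insert one scored chunk; GARM predictions are kept as raw JSON."""
    conn.execute(
        "INSERT INTO chunk_scores VALUES (?, ?, ?, ?, ?, ?, ?)",
        (chunk["show"], chunk["episode_id"], chunk["speaker"],
         chunk["start_s"], chunk["end_s"], attack_type, json.dumps(garm)),
    )


def attacks_per_episode(conn: sqlite3.Connection, show: str) -> dict:
    """Count attack-labeled chunks per episode for one show."""
    rows = conn.execute(
        "SELECT episode_id, COUNT(*) FROM chunk_scores "
        "WHERE show = ? AND attack_type IN ('severe_attack', 'non_severe_attack') "
        "GROUP BY episode_id ORDER BY episode_id",
        (show,),
    )
    return dict(rows.fetchall())
```

Storing the raw GARM output as JSON keeps the original predictions available if your aggregation rules change later.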

6. Aggregate into Episode and Show-Level Scores

- Episode aggregation:

  - Compute the total number of attacks and risk instances per episode.

  - Derive Civility Score (attacks/hour)

- Show aggregation:

  - Aggregate across all episodes to compute:

    - Average Civility Score

    - Most frequently occurring GARM risks

7. (Optional) Display via Internal Dashboard or Serve via API

- Build a frontend dashboard or reporting UI for internal teams to explore risk summaries, Civility trends, or episode-level classification details.

- Optionally, expose the scores and metadata via an internal API for downstream usage in ad decisioning systems, content flagging tools, or brand safety dashboards.

Step-by-Step Guide: Using Seekr/content-guard for Podcast Scoring

This guide walks you through how to build and operate a full pipeline using SeekrFlow's Seekr/content-guard model to score podcast episode transcripts for GARM risk and Seekr Civility™. You’ll deploy the model, transcribe audio content, chunk the transcript, send each chunk to the model, store the results, and roll up the outputs into structured episode- and show-level scores.

Step 1: Deploy the Model

- Go to the SeekrFlow Model Library

- Deploy the `Seekr/content-guard` model

- Copy the Model ID to use in API or SDK inference calls

- No fine-tuning or special config required; it's ready to use as-is

Step 2: Acquire and Parse the Podcast RSS Feed

- Find a public RSS feed for your target podcast:

  - Use PodcastIndex.org, ListenNotes API, or check the podcast's website

  - Example feed: `https://feeds.megaphone.fm/GLT1412515089` (Joe Rogan Experience)

- Parse the XML feed to extract:

  - `.mp3` or `.mp4` download URL for each episode (look for `<enclosure url="...">`)

  - Metadata such as episode title, date, description, host, etc.
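
A minimal sketch of the enclosure extraction, using Python's standard `xml.etree.ElementTree`, might look like the following. The function name `parse_feed`, the returned dictionary keys, and the `SAMPLE_FEED` document are made up for illustration. Fetching the feed over HTTP is left out, and for untrusted feeds a hardened XML parser is worth considering.

```python
import xml.etree.ElementTree as ET

# Made-up RSS 2.0 snippet so the example runs offline.
SAMPLE_FEED = """<rss version="2.0"><channel><title>Example Show</title>
<item><title>Episode 1</title><pubDate>Mon, 01 Jan 2024 00:00:00 GMT</pubDate>
<enclosure url="https://example.com/ep1.mp3" type="audio/mpeg" length="123"/></item>
<item><title>Trailer without audio</title></item>
</channel></rss>"""


def parse_feed(xml_text: str) -> list:
    """Return one dict per <item> that carries an <enclosure url="...">."""
    root = ET.fromstring(xml_text)
    episodes = []
    for item in root.iter("item"):
        enclosure = item.find("enclosure")
        if enclosure is None or not enclosure.get("url"):
            continue  # skip items with no downloadable audio
        episodes.append({
            "title": (item.findtext("title") or "").strip(),
            "published": (item.findtext("pubDate") or "").strip(),
            "audio_url": enclosure.get("url"),
            "mime_type": enclosure.get("type", ""),
        })
    return episodes
```

Calling `parse_feed(SAMPLE_FEED)` returns a single entry for "Episode 1", because the second item has no enclosure.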

Step 3: Transcribe the Audio with Diarization

- Use Whisper or a similar STT (speech-to-text) model to transcribe the audio

- Required: speaker diarization and timestamps per utterance

- Tools that support this:

  - OpenAI Whisper + pyannote.audio

  - AssemblyAI (commercial)

  - Deepgram

  - Google Cloud Speech-to-Text

  - AWS Transcribe

- Output should look like:

  - Speaker-labeled utterances

  - Start and end times per line

  - Clean, readable text (punctuated)
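
When transcription and diarization come from two separate tools, their outputs have to be merged. The sketch below assumes you already have timestamped ASR segments and speaker turns as plain tuples, and assigns each segment to the speaker whose turn overlaps it most. The tuple layouts, the `Utterance` type, and the `"UNKNOWN"` fallback are assumptions for this example, not the output format of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    speaker: str
    start: float  # seconds
    end: float    # seconds
    text: str


def assign_speakers(asr_segments: list, speaker_turns: list) -> list:
    """Label each ASR segment with the speaker whose turn overlaps it most.

    asr_segments: (start, end, text) tuples from a transcription tool.
    speaker_turns: (start, end, speaker) tuples from a diarization tool.
    """
    utterances = []
    for seg_start, seg_end, text in asr_segments:
        best_speaker, best_overlap = "UNKNOWN", 0.0
        for turn_start, turn_end, speaker in speaker_turns:
            # Length of the time interval shared by segment and turn.
            overlap = min(seg_end, turn_end) - max(seg_start, turn_start)
            if overlap > best_overlap:
                best_speaker, best_overlap = speaker, overlap
        utterances.append(Utterance(best_speaker, seg_start, seg_end, text.strip()))
    return utterances
```

Segments that overlap no speaker turn keep the `"UNKNOWN"` label, which makes diarization gaps easy to spot before chunking.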

Step 4: Chunk the Transcript

- Segment the transcript into short, speaker-anchored chunks (~30–60 seconds each)

- Recommended chunking strategies:

  - By speaker turn: Group utterances by the same speaker until time cap is reached

  - By timestamp: If speaker diarization is noisy, split every 45–60 seconds

- Ensure each chunk:

  - Has logical completeness (don’t cut mid-sentence)

  - Includes metadata:

    - Speaker ID

    - Start and end timestamp

    - Episode ID (required for aggregation later)
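
The "by speaker turn" strategy could be sketched as below. It assumes the transcript is a list of dictionaries with `speaker`, `start`, `end`, and `text` keys, ordered by start time, and that each utterance is already a complete sentence or phrase. Whole utterances are never split, which is how the sketch avoids cutting mid-sentence. The function name and the 60-second default are illustrative.

```python
def chunk_by_speaker(utterances: list, episode_id: str, max_seconds: float = 60.0) -> list:
    """Group consecutive same-speaker utterances until the time cap is hit."""
    chunks = []
    current = None
    for utt in utterances:
        same_speaker = current is not None and utt["speaker"] == current["speaker"]
        fits = current is not None and utt["end"] - current["start"] <= max_seconds
        if same_speaker and fits:
            # Extend the open chunk with the whole utterance.
            current["end"] = utt["end"]
            current["text"] += " " + utt["text"]
        else:
            # Speaker changed or cap exceeded: close the chunk, start a new one.
            if current is not None:
                chunks.append(current)
            current = {
                "episode_id": episode_id,
                "speaker": utt["speaker"],
                "start": utt["start"],
                "end": utt["end"],
                "text": utt["text"],
            }
    if current is not None:
        chunks.append(current)
    return chunks
```

A single utterance longer than `max_seconds` still becomes its own chunk here. Whether to split such utterances further is a design decision this sketch leaves open.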

Step 5: Run Scoring Inference on Chunks

- Send each chunk of text to the deployed model using the following format:

      {
        "text": "chunk text here",
        "llm": "seekr-content-guard"
      }

- The model returns GARM and Civility results:

GARM Output Example:

    [
      { "category": "profanity", "risk_level": "medium" },
      { "category": "hate speech", "risk_level": "high" }
    ]

Civility Output (only if an attack is detected):

    {
      "attack_type": "severe"
    }

- Store each chunk’s scores in a structured database or file for post-processing
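
The snippet below sketches how the request body shown above could be built and how the two example outputs could be normalized before storage. It deliberately stops short of the network call, since the endpoint and SDK method are not described here. The assumption that GARM and Civility results arrive as two separate JSON documents, the helper names, and the `ATTACK_ALIASES` mapping (which reconciles the short labels in these examples with the labels in the Civility Output Format section) are all hypothetical.

```python
import json
from typing import Optional

# Hypothetical mapping between short example labels and the documented ones.
ATTACK_ALIASES = {
    "severe": "severe_attack",
    "severe_attack": "severe_attack",
    "general": "non_severe_attack",
    "non_severe_attack": "non_severe_attack",
    "no_attack": "no_attack",
}


def build_payload(chunk_text: str, model_id: str = "seekr-content-guard") -> str:
    """Serialize one chunk into the request format shown above."""
    return json.dumps({"text": chunk_text, "llm": model_id})


def parse_result(garm_json: str, civility_json: Optional[str]) -> dict:
    """Normalize one chunk's GARM and Civility outputs into a single record."""
    garm = [
        (pred["category"], pred["risk_level"].lower())
        for pred in json.loads(garm_json)
    ]
    attack = "no_attack"  # assumed default when no Civility output is returned
    if civility_json:
        raw = json.loads(civility_json).get("attack_type")
        if raw is not None:
            if raw not in ATTACK_ALIASES:
                raise ValueError(f"unexpected attack_type: {raw!r}")
            attack = ATTACK_ALIASES[raw]
    return {"garm": garm, "attack_type": attack}
```

Raising on an unrecognized label, rather than silently treating it as `no_attack`, keeps schema drift visible instead of quietly lowering attack counts.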

Step 6: Aggregate Chunk-Level Results

Episode-Level Aggregation

- GARM Scoring:

  - Group all chunk predictions by episode

  - For each GARM category:

    - Count how many times it appears

    - Use risk severity weighting: Floor > High > Medium > Low > None

- Civility Scoring:

  - Calculate attacks per hour by adding up all the attacks and dividing by duration of the episode
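
One simple reading of the GARM roll-up is to count detections per category and keep the most severe level seen, using the ordering Floor > High > Medium > Low > None. The sketch below does exactly that. Taking the worst level as the episode-level result is an assumption, as are the `SEVERITY` ranks and the function name; a weighted sum or a frequency threshold would be equally valid policies.

```python
from collections import defaultdict

# Rank order taken from the severity weighting above.
SEVERITY = {"none": 0, "low": 1, "medium": 2, "high": 3, "floor": 4}


def aggregate_garm(chunk_predictions: list) -> dict:
    """Summarize per-chunk GARM predictions for one episode.

    chunk_predictions: one list per chunk, each holding
    {"category": ..., "risk_level": ...} dicts.
    """
    summary = defaultdict(lambda: {"count": 0, "worst": "none"})
    for predictions in chunk_predictions:
        for pred in predictions:
            level = pred["risk_level"].lower()
            if SEVERITY[level] == 0:
                continue  # "none" means the category was not present
            entry = summary[pred["category"]]
            entry["count"] += 1
            if SEVERITY[level] > SEVERITY[entry["worst"]]:
                entry["worst"] = level
    return dict(summary)
```

With this policy, a category that appears twice at `low` and `medium` is reported with a count of 2 and a worst level of `medium`.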

Show-Level Aggregation

- Average across multiple episode scores per show

- Useful for advertisers, media analysts, or long-term moderation systems
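
Show-level roll-up can be as small as an average plus a frequency count. The sketch below assumes each episode has already been reduced to a Civility Score and a list of detected GARM categories. The input shape, the function name, and the `top_n` parameter are illustrative.

```python
from collections import Counter
from statistics import mean


def aggregate_show(episodes: list, top_n: int = 3) -> dict:
    """Average episode Civility Scores and rank GARM categories for a show.

    episodes: dicts with "civility_score" (float) and
    "garm_categories" (list of category names detected in that episode).
    """
    if not episodes:
        raise ValueError("at least one episode is required")

    average_score = mean(ep["civility_score"] for ep in episodes)
    # Count each category once per episode so long episodes do not dominate.
    category_counts = Counter(
        category for ep in episodes for category in set(ep["garm_categories"])
    )
    return {
        "average_civility_score": average_score,
        "top_garm_categories": category_counts.most_common(top_n),
    }
```

This is an unweighted average, so a 20-minute episode counts as much as a 3-hour one. Weighting by episode duration is a reasonable alternative if episode lengths vary widely.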

Step 7: (Optional) Display Results or Serve via API

- Store aggregated outputs in your database or index

- Create internal dashboards or reports to visualize:

  - Risky episodes

  - Show-wide GARM heatmaps

  - Civility trends over time

- Or build an API for external clients to query episode/show scores

Output Examples

Per-Chunk GARM Output:

    { "category": "terrorism", "risk_level": "floor" }

Per-Chunk Civility Output:

    { "attack_type": "general" }

FAQ

Q: Can I use this model on articles or blog posts?

No. This model is intended only for spoken podcast content from .mp3 or .mp4 sources.

Q: Can I skip chunking and send the whole episode?

No. The model is built for short, focused chunks. Full episodes will not work reliably.

Q: Can I use this without Whisper?

Technically, yes — but you need diarized speaker-separated transcripts. Whisper is strongly recommended.

Q: Are GARM and Civility scores combined?

No. They are separate outputs. You must aggregate them independently.

Q: What counts as a “severe” attack?

Slurs or insults based on protected traits (e.g., race, religion, gender identity). See: US EEOC definitions
