Meta Llama-Guard model

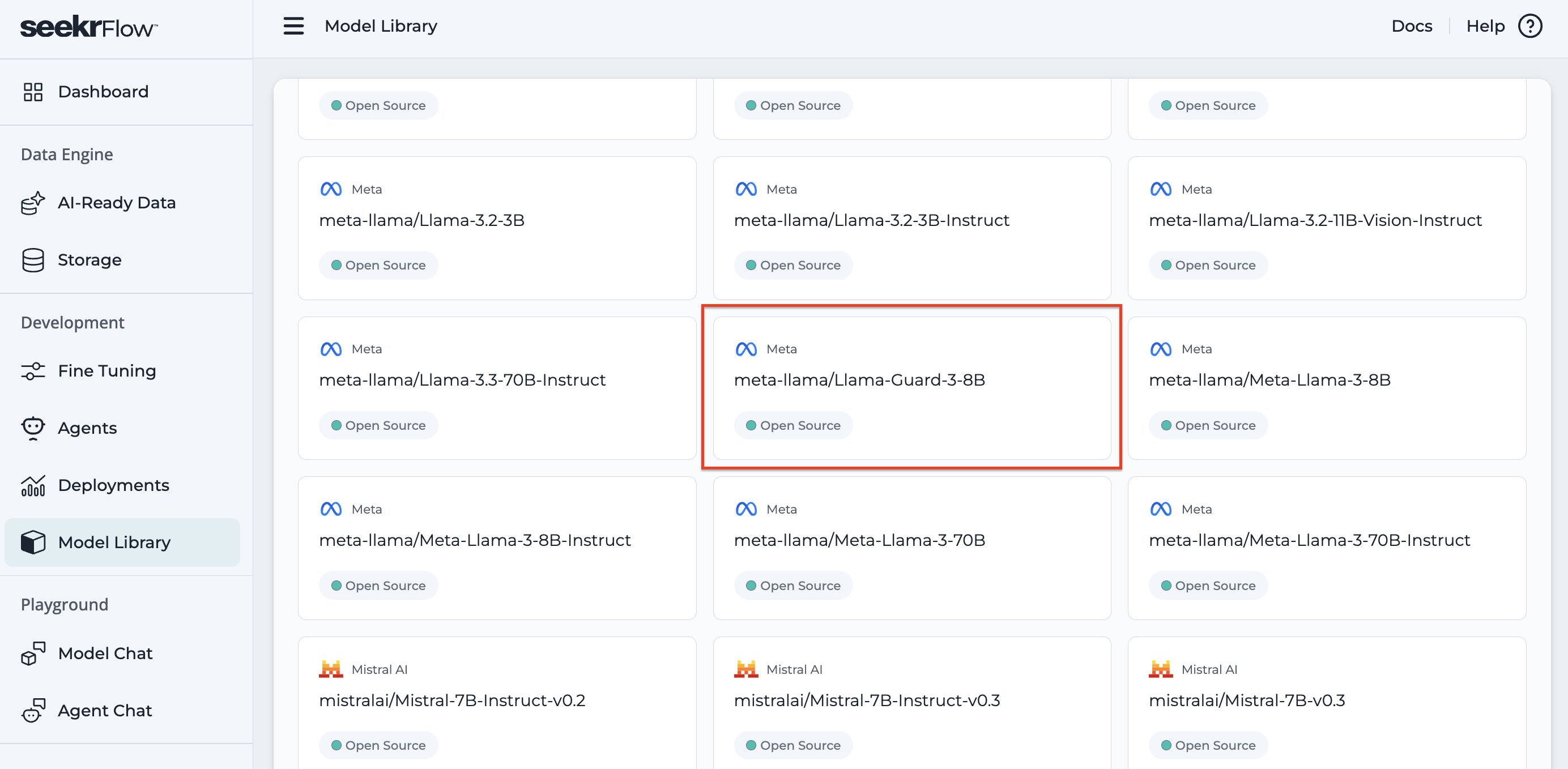

This page explains how to use the Llama Guard model in SeekrFlow.

Full Model Guide: meta-llama/Llama-Guard-3-8B

Summary

Llama Guard 3 (8B) is an open-source large language model from Meta designed for content moderation and safety classification. It evaluates user-generated text across categories such as hate speech, harassment, violence, and misinformation. Now available in the SeekrFlow model library, this model can be deployed to flag unsafe content in chat, social platforms, RAG pipelines, and agent interactions.

- Moderation Type: Text-based classification
- Taxonomy: MLCommons Responsible AI (22-category hazard set)
- Usage Context: Real-time inference or post-hoc content safety auditing


Key Features

- 22 Risk Categories: Based on the MLCommons Responsible AI taxonomy (e.g., hate, violence, self-harm, sexual content)
- Supports 1st or 2nd Person Framing: Detects unsafe content from either user or assistant messages
- Multi-language Capable: While optimized for English, can moderately generalize to Spanish, French, German, etc.
- Simple Prompt Format: Uses a clean, JSON-like input for user and assistant messages
- Open-source, Lightweight Model: Uses the 8B Llama 3 base model for relatively fast, deployable inference

Target Input Type

- Text-based user or assistant messages (chat-based systems, generative agents, Q&A apps)

- Supports both single message and multi-turn conversation review

- Not built for audio or video moderation — text only

Taxonomy Categories (MLCommons Hazard Set)

The model classifies text using the MLCommons Responsible AI hazard taxonomy, including:

- Hate
- Harassment
- Sexual content
- Self-harm
- Violence
- Criminal planning
- Weapons
- Drugs
- Alcohol
- Misinformation
- Health misinfo
- Legal misinfo
- Spam
- Profanity
- Insults
- Graphic content
- NSFW
- Solicitation
- Extremism
- Privacy violation
- Malicious code
- Financial harm

Each category returns a "safe" or "unsafe" label.

Languages Supported

- Primary: English

- Partial Generalization: Spanish, French, German, Portuguese, Italian, Dutch, etc.

- Model has not been fine-tuned for multi-language safety, so results may vary across languages.

How to Use in SeekrFlow (Step-by-Step)

- Deploy the Model
  - Go to SeekrFlow Model Library
  - Deploy `meta-llama/Llama-Guard-3-8B`
  - Copy the model ID
- Format Your Input
  - Use the structured message format:

        {
          "messages": [
            {"role": "user", "content": "Hey, you suck and I hate you."}
          ]
        }

- Run Inference via SDK or API
  - Pass the `messages` array with your deployed model ID
  - Results will return one or more `unsafe` category flags
- Handle Unsafe Output
  - Optional: Block, redact, route to human review, or rephrase based on categories flagged
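The steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the SeekrFlow SDK: `build_request`, `choose_action`, `moderate`, the `send` callable, the `"model"` request key, and the policy sets are all hypothetical names chosen for this example, and the response is assumed to have the shape shown under Output Format on this page.

```python
from typing import Callable

# Hypothetical policy: which flagged categories trigger which action.
BLOCK = {"violence"}
REVIEW = {"hate", "harassment"}


def build_request(model_id: str, messages: list[dict]) -> dict:
    """Wrap the messages array with the deployed model ID (assumed payload shape)."""
    return {"model": model_id, "messages": messages}


def choose_action(result: dict) -> str:
    """Map flagged categories to an action, assuming the documented output shape."""
    if not result.get("unsafe", False):
        return "allow"
    flagged = {name for name, hit in result.get("categories", {}).items() if hit}
    if flagged & BLOCK:
        return "block"
    if flagged & REVIEW:
        return "human_review"
    return "redact"


def moderate(model_id: str, messages: list[dict], send: Callable[[dict], dict]) -> str:
    """Send the request through a caller-supplied transport and pick an action."""
    return choose_action(send(build_request(model_id, messages)))
```

Passing the transport in as `send` keeps the sketch independent of any particular client: swap in whichever SDK or HTTP call your deployment actually uses.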

Model Prompt Format & Best Practices

Input Format

    {
      "messages": [
        { "role": "user", "content": "..." },
        { "role": "assistant", "content": "..." }
      ]
    }

- Can include just user input or both user + assistant responses

- Order matters; system assumes a back-and-forth conversation
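A small pre-flight check can catch malformed input before it is sent. The sketch below is illustrative only: `validate_messages` is a hypothetical helper, and it assumes the conversation starts with a user message and alternates strictly between `user` and `assistant`, which is one reading of "back-and-forth" rather than a documented requirement.

```python
ALLOWED_ROLES = ("user", "assistant")


def validate_messages(messages: list[dict]) -> list[str]:
    """Return a list of problems; an empty list means the input looks well-formed."""
    problems = []
    if not messages:
        problems.append("messages is empty")
    expected = "user"  # assumption: conversations open with a user turn
    for i, msg in enumerate(messages):
        role = msg.get("role")
        if role not in ALLOWED_ROLES:
            problems.append(f"message {i}: unexpected role {role!r}")
            continue
        if role != expected:
            problems.append(f"message {i}: expected {expected!r}, got {role!r}")
        if not str(msg.get("content", "")).strip():
            problems.append(f"message {i}: empty content")
        # The next turn should come from the other party.
        expected = "assistant" if role == "user" else "user"
    return problems
```

Returning a list of problems instead of raising lets an auditing job log every issue in a transcript in one pass.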

Output Format

    {
      "unsafe": true,
      "categories": {
        "hate": true,
        "violence": false,
        "harassment": true,
        ...
      }
    }

Best Practices

- Keep messages short (single utterance or <300 tokens)

- Structure your input for clarity (don't mix system prompts with user messages)

- Use consistent formatting if auditing multi-turn chats
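One way to follow the message-length guidance is to split long text into smaller pieces before classification. The sketch below is a rough illustration: `approx_tokens` and `split_message` are hypothetical helpers, and counting whitespace-separated words is only an approximation of the model's tokenizer, so real token counts will usually be higher.

```python
def approx_tokens(text: str) -> int:
    """Rough token estimate: whitespace-separated words, not the model tokenizer."""
    return len(text.split())


def split_message(text: str, max_tokens: int = 300) -> list[str]:
    """Split text into chunks of at most max_tokens approximate tokens."""
    words = text.split()
    return [
        " ".join(words[i:i + max_tokens])
        for i in range(0, len(words), max_tokens)
    ]
```

Splitting on a fixed word count can cut a sentence in half and lose context, so a sentence-aware splitter may be a better fit for production use.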

What SeekrFlow Provides

- Fully hosted version of `Llama-Guard-3-8B`
- Fast, scalable API and SDK integration

- Outputs content moderation flags per message

- Access control, observability, and logging

What You Can Build Separately

- Pipeline to generate or retrieve user messages

- Model inference logic (loop through messages, score each)

- Optional: dashboard, moderation UI, policy logic, score aggregation, redaction tools
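The "loop through messages, score each" logic and a simple aggregation step might look like the sketch below. It is a minimal example under assumptions: `score_messages` and `aggregate` are hypothetical helpers, `classify` stands in for whatever call reaches your deployed model, and each result is assumed to follow the Output Format shown earlier on this page.

```python
from collections import Counter
from typing import Callable


def score_messages(messages: list[dict], classify: Callable[[list[dict]], dict]) -> list[dict]:
    """Classify each message on its own and keep the result next to its index."""
    return [{"index": i, "result": classify([msg])} for i, msg in enumerate(messages)]


def aggregate(scored: list[dict]) -> dict:
    """Summarize which messages were unsafe and how often each category fired."""
    counts = Counter()
    for item in scored:
        for name, hit in item["result"].get("categories", {}).items():
            if hit:
                counts[name] += 1
    unsafe_indexes = [item["index"] for item in scored if item["result"].get("unsafe")]
    return {"unsafe_indexes": unsafe_indexes, "category_counts": dict(counts)}
```

The summary dictionary is the kind of input a dashboard or policy layer could consume; scoring messages one at a time trades conversational context for per-message attribution.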

Summary Table

| Feature | Provided by SeekrFlow | Built by You |
|---|---|---|
| Hosted model deployment | ✅ | |
| SDK & API access | ✅ | |
| Moderation flags (per message) | ✅ | |
| Input structuring | | ✅ |
| UI or dashboard | | ✅ |
| Logging and analytics | ✅ (optional) | |
| Moderation actions | | ✅ (block, flag, etc.) |

Limitations

- No confidence scores — only binary (safe/unsafe) output

- English-centric — lower accuracy in non-English text

- Context window is limited to ~8k tokens (short convos recommended)

- Not fine-tuned for extremely short, isolated phrases

- No audio, image, or video input support
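To stay within the context limit when auditing long conversations, one option is to keep only the most recent turns. The sketch below is illustrative: `trim_history` is a hypothetical helper, the 8000 default mirrors the approximate limit stated above, and the word-count estimate undercounts real tokens, so leave headroom.

```python
def trim_history(messages: list[dict], max_tokens: int = 8000) -> list[dict]:
    """Keep the most recent messages whose combined approximate size fits the budget."""
    kept = []
    used = 0
    # Walk backwards so the newest turns are kept first.
    for msg in reversed(messages):
        cost = len(str(msg.get("content", "")).split())  # rough word-count estimate
        if used + cost > max_tokens:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

Note that the trimmed history may begin with an assistant message; drop that leading turn if your input needs to start with the user.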

Use Cases

- Chat moderation (real-time or post-hoc)

- Filtering unsafe input/output from LLMs

- RAG system safety layer before retrieval/inference

- Agent communication review or agent guardrails

- Comment section moderation for news or community platforms

FAQs

Q: Can I use Llama Guard 3 for non-English content?

A: It may work on major Western languages, but it is optimized for English, so accuracy may drop on non-English text.

Q: Can I use this on a full conversation or only individual messages?

A: You can submit a full message history in the `messages` array, but it's best to keep it short for clarity and performance.

Q: Does it return a confidence score?

A: No, only binary true/false flags per category.

Q: Can I fine-tune or add my own taxonomy?

A: Not currently via SeekrFlow. You could host a modified version on your own infra if needed.

Q: How does this compare to Seekr ContentGuard?

A: Llama Guard is general-purpose and covers a wide range of text content. Seekr ContentGuard is specifically tuned for podcast episodes and uses different scoring systems (GARM + Civility).
