Release Notes | November 2024

November’s release introduces features that give users greater control over their AI workflows and make them more efficient, from optimizing model outputs to managing large-scale deployments. For more details, read our full release blog.

New Features

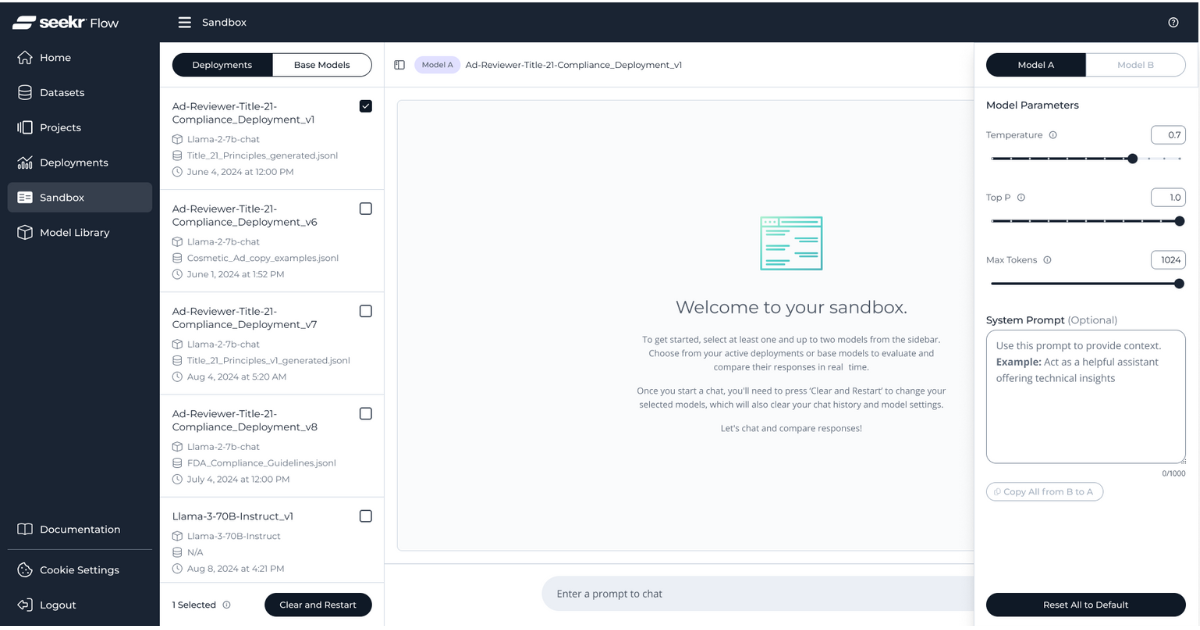

Sandbox Input Parameters

We have added new input parameters to the Sandbox environment: Temperature, Top P, and Max Tokens. These options give more control over inference outputs, allowing users to tailor responses for specific tasks and use cases.
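To make the first two parameters concrete, here is a minimal sketch of how temperature and top-p are commonly defined for sampling. It illustrates the general technique with made-up scores and is not a description of SeekrFlow's internals; the function names are hypothetical. Max Tokens is not shown, since it simply caps the length of the generated output.

```python
import math

def apply_temperature(logits, temperature):
    """Softmax over logits divided by temperature (temperature must be > 0)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose combined
    probability reaches top_p, then renormalize over that set."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    return {i: probs[i] / mass for i in kept}

logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens

sharp = apply_temperature(logits, 0.5)  # low temperature: mass concentrates on the top token
flat = apply_temperature(logits, 1.5)   # high temperature: mass spreads out
nucleus = top_p_filter(apply_temperature(logits, 1.0), top_p=0.8)  # drops the least likely token
```

Lower temperatures and lower top-p values both make output more predictable; higher values allow more varied responses.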

Enhanced Inference Engine for Faster Inference

With the integration of vLLM, inference speeds have dramatically improved, making your AI workflows faster and more efficient. This integration ensures that even complex models deliver faster results, helping users achieve more in less time.

We ran performance tests comparing TGI and vLLM on Intel Gaudi2 accelerators, using the following versions:

- TGI: tgi-gaudi v2.0.4

- vLLM: vllm-fork v0.5.3.post1-Gaudi-1.17.0

On average, the enhanced inference engine was 32% faster than TGI in the RAG experiments (full interaction traces, 10 concurrent requests) using Meta-Llama-3.1-8B-Instruct.

Load testing with 100 concurrent users also showed significant latency improvements:

- Meta-Llama-3-8B-Instruct: ~45% faster

- Meta-Llama-3.1-8B-Instruct: ~39% faster

Enhanced OpenAI Compatibility Features

Our inference engine now seamlessly integrates with OpenAI’s ecosystem, expanding workflow capabilities and enhancing usability.

- Log Probabilities: New support for logprobs and top_logprobs, providing insight into model decision-making, aiding debugging, and helping improve output accuracy.

- Dynamic Tool Calling: Custom functions can now be automatically invoked by the model based on context, streamlining business logic integration.
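As a small illustration of how log probabilities can be read, the sketch below converts them into ordinary probabilities with exp(). The token values are made up for illustration, and the helper name is hypothetical. With the OpenAI Python client, log probabilities are requested by passing logprobs=True and top_logprobs=<n> to chat.completions.create; how the input dictionary here is filled from the response is left as an assumption.

```python
import math

def logprobs_to_probs(top_logprobs):
    """Convert a {token: logprob} mapping into {token: probability}."""
    return {token: math.exp(lp) for token, lp in top_logprobs.items()}

# Made-up candidates for a single output position (not real model output)
example = {"miles": -0.105, "km": -2.659, "meters": -4.2}

probs = logprobs_to_probs(example)
best = max(probs, key=probs.get)  # the candidate the model considered most likely
```

A log probability close to 0 means the model was nearly certain of that token, while strongly negative values mark unlikely alternatives, which is useful when debugging unexpected outputs.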

Try it yourself!

The example code below shows you how to leverage the OpenAI client and SeekrFlow's inference engine to create a custom unit conversion tool that can be configured dynamically.

Create the client and make an API request

```python
import os

import openai

# Set the API key
os.environ["OPENAI_API_KEY"] = "Paste your API key here"

# Create the OpenAI client, pointing it at SeekrFlow's inference endpoint.
client = openai.OpenAI(
    base_url="https://flow.seekr.com/v1/inference",
    api_key=os.environ.get("OPENAI_API_KEY"),
)

# Send a chat request through the OpenAI client, offering the specified
# Llama model a unit conversion tool it can call.
response = client.chat.completions.create(
    model="meta-llama/Llama-3.1-8B-Instruct",
    stream=False,
    messages=[{
        "role": "user",
        "content": "Convert from 5 kilometers to miles"
    }],
    max_tokens=100,
    tools=[{
        "type": "function",
        "function": {
            "name": "convert_units",
            "description": "Convert between different units of measurement",
            "parameters": {
                "type": "object",
                "properties": {
                    "value": {"type": "number"},
                    "from_unit": {"type": "string"},
                    "to_unit": {"type": "string"}
                },
                "required": ["value", "from_unit", "to_unit"]
            }
        }
    }]
)
```

Register a function from JSON

Next, define and register a Python function from JSON data.

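```python
# Parse JSON and register the function it describes
def register_from_json(json_obj):
    # Assemble the function source from the JSON fields
    code = f"def {json_obj['name']}({', '.join(json_obj['args'])}):\n{json_obj['docstring']}\n{json_obj['code']}"
    print(code)
    namespace = {}
    # exec runs arbitrary code, so only pass JSON from a trusted source
    exec(code, namespace)
    return namespace[json_obj["name"]]
```

Run the unit conversion tool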
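The execution step looks the tool up by name in the global namespace, so a function called convert_units has to be registered before the tool call is run. The JSON definition itself is not shown here, so the one below is a hypothetical example: the field contents, the conversion factors, and the two supported unit names are all assumptions. register_from_json is repeated (without the print) only so that the sketch runs on its own.

```python
def register_from_json(json_obj):
    """Build a function from its JSON description (repeated from above)."""
    code = (
        f"def {json_obj['name']}({', '.join(json_obj['args'])}):\n"
        f"{json_obj['docstring']}\n"
        f"{json_obj['code']}"
    )
    namespace = {}
    exec(code, namespace)  # only use with trusted JSON
    return namespace[json_obj["name"]]

# Hypothetical tool definition; the docstring and code fields carry their own
# indentation because they are pasted directly into the function body.
convert_units_spec = {
    "name": "convert_units",
    "args": ["value", "from_unit", "to_unit"],
    "docstring": '    """Convert a value between kilometers and miles."""',
    "code": (
        "    factors = {('kilometers', 'miles'): 0.621371,\n"
        "               ('miles', 'kilometers'): 1.609344}\n"
        "    return round(value * factors[(from_unit, to_unit)], 6)"
    ),
}

# Binding the result to a module-level name makes it visible to globals()
convert_units = register_from_json(convert_units_spec)
```

If the model emits unit names other than the two assumed here (for example "km"), this sketch raises a KeyError; a fuller version would normalize unit names first.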

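The execute_tool_call function below runs the tool call contained in an LLM response object. It looks up the requested function by name in the global namespace, so the tool must already be registered there.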

```python
import json

# Execute our tool
def execute_tool_call(resp):
    # Take the first tool call the model requested
    tool_call = resp.choices[0].message.tool_calls[0]
    func_name = tool_call.function.name
    args = tool_call.function.arguments

    # Look up the registered function by name
    func = globals().get(func_name)
    if not func:
        raise ValueError(f"Function {func_name} not found")

    # Arguments may arrive as a JSON string; decode them into a dict
    if isinstance(args, str):
        args = json.loads(args)

    return func(**args)

execute_tool_call(response)
```

Sample output

This is the output expected in response to the request made earlier to convert 5 kilometers to miles.

3.106855

Federated Login with Intel

Intel® Tiber™ AI Cloud users can now access SeekrFlow™ with a new federated login feature.

First-time users: Start by using your Intel® Tiber™ AI Cloud credentials, which will auto-populate the sign-up form for quick and easy access to SeekrFlow.

Returning users: Simply log in with your Intel® Tiber™ AI Cloud credentials for direct access to SeekrFlow.

This integration simplifies user management and access for those connected to Intel® Tiber™ AI Cloud.

Improvements & Bug Fixes

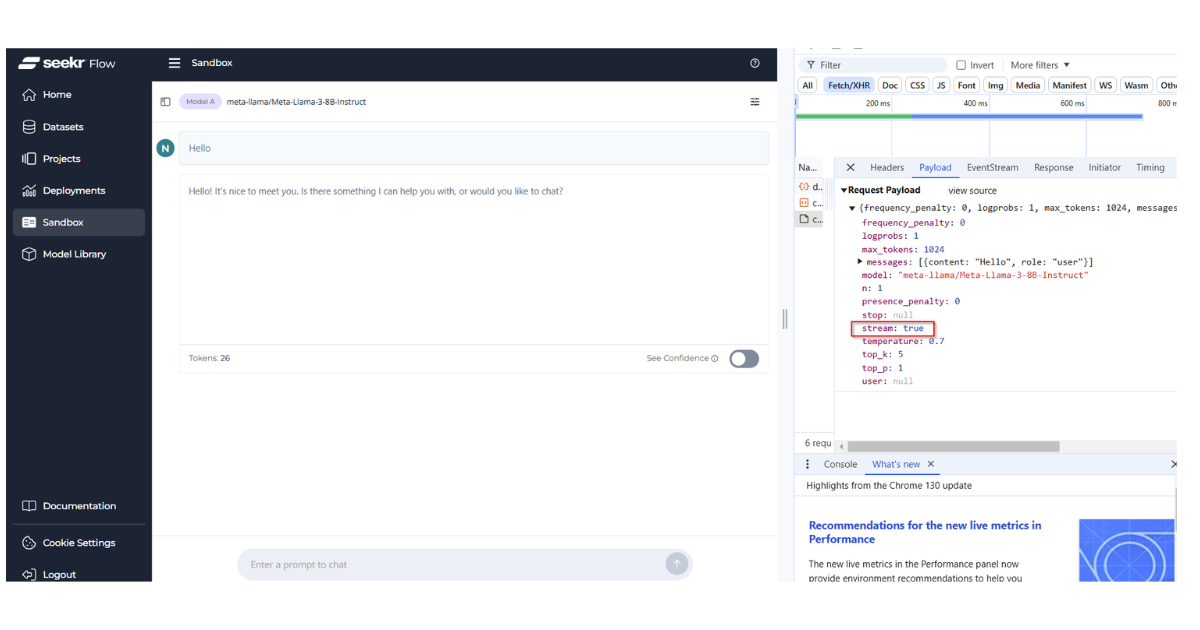

Streaming in Sandbox

We have enabled streaming for chat responses in the Sandbox. Results are now delivered incrementally, so users can start reading a response before it is complete.
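The Sandbox handles streaming in the UI. For readers calling the API through the OpenAI client, the sketch below shows the general pattern for consuming a response incrementally. It assumes the endpoint accepts stream=True and returns chunks in the OpenAI chat-completions shape (chunk.choices[0].delta.content), which this release note does not confirm. The helper names are hypothetical, and the fake chunks exist only so the example runs offline.

```python
from types import SimpleNamespace

def collect_stream(chunks, on_token=print):
    """Hand each streamed text piece to on_token as it arrives and
    return the full text once the stream ends."""
    parts = []
    for chunk in chunks:
        if not chunk.choices:
            continue
        piece = chunk.choices[0].delta.content
        if piece:  # some chunks carry no text (e.g. role or finish markers)
            on_token(piece)
            parts.append(piece)
    return "".join(parts)

def fake_chunk(text):
    """Stand-in for a streamed chunk, mimicking the OpenAI chunk shape."""
    delta = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])

demo_chunks = [fake_chunk("Hel"), fake_chunk(None), fake_chunk("lo")]
full_text = collect_stream(demo_chunks, on_token=lambda piece: None)
```

With a real client, the iterable would be the object returned by client.chat.completions.create(..., stream=True), under the assumption stated above.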

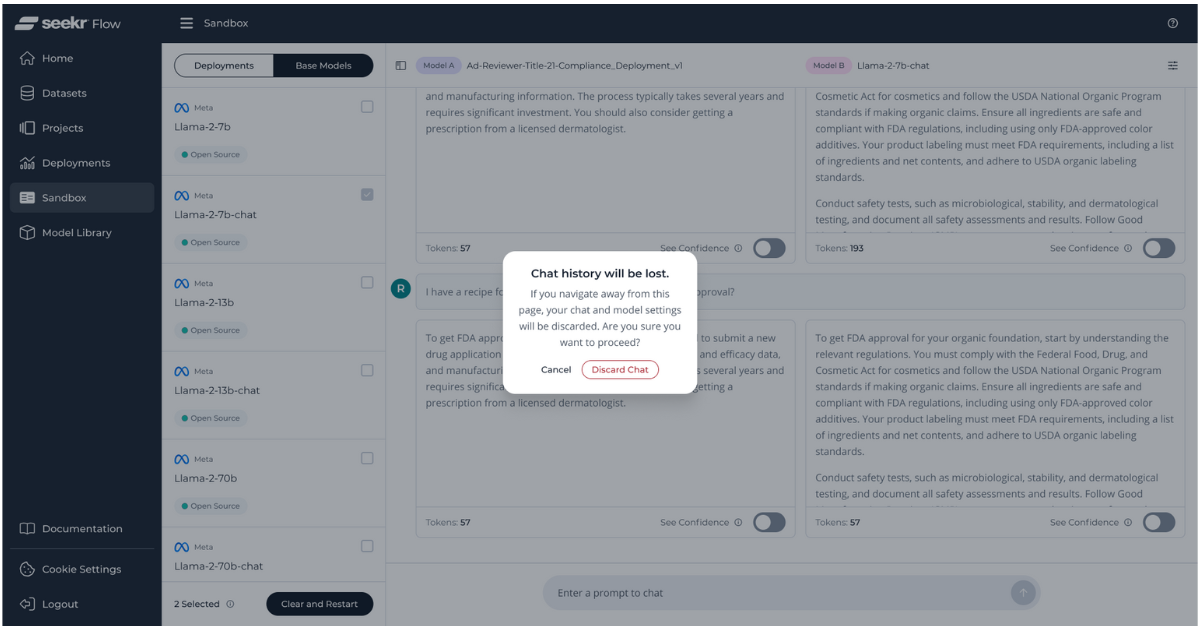

Clear and Restart Button Fix

We have resolved an issue where the “Clear and Restart” button in Sandbox didn’t function. A dialog box now appears, confirming that chat history will be cleared, allowing you to start fresh.

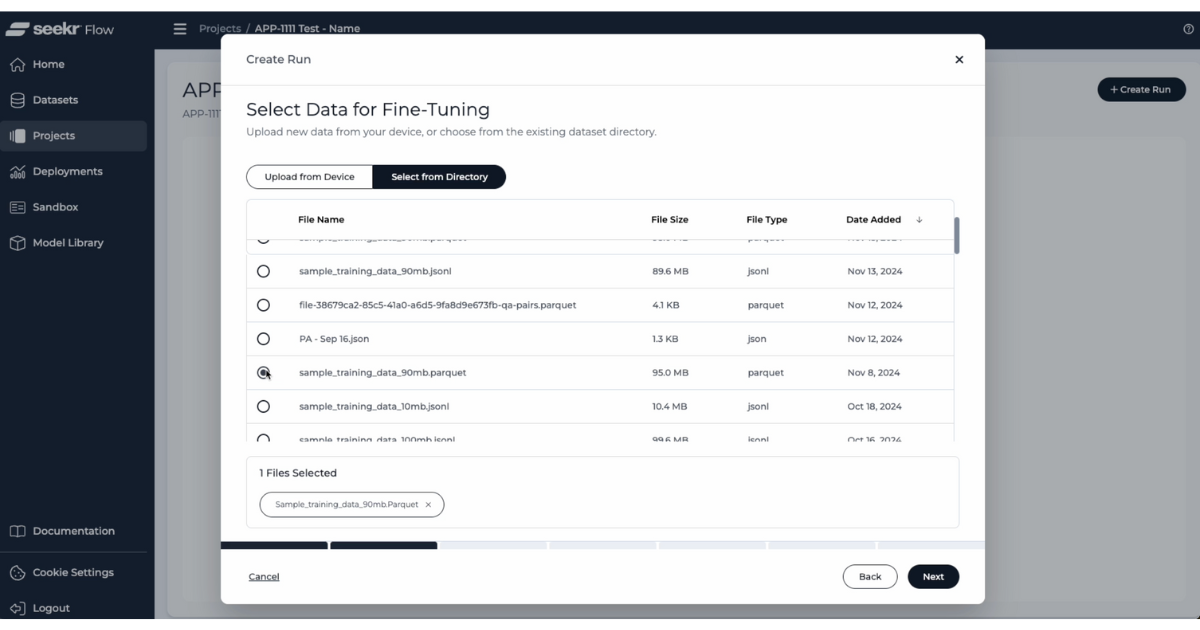

Dataset Directory Update

Uploaded file improvements in the Create Run modal:

- Successfully uploaded files immediately appear in the dataset directory.

- Switching to the directory view auto-selects the newly uploaded file.

- Radio buttons now remain active, ensuring smooth file selection.

UI/UX Enhancements

We have made several updates to improve the user experience and provide clearer guidance across the platform:

- Sandbox: Updated wording to explain the new model parameter settings more clearly.

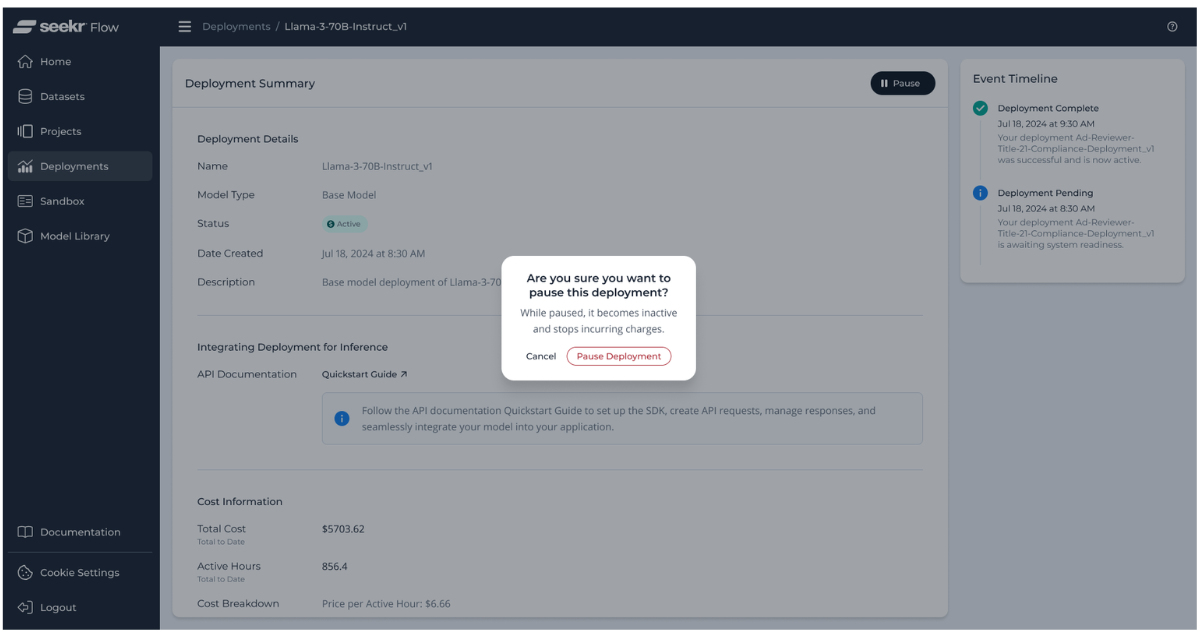

- Deployment Dashboard: Enhanced explanations of cost transparency features for better understanding of resource usage.

- Projects: Cancellation dialogs now show detailed cost information related to token usage, offering users more visibility into their resource consumption.

These updates aim to make SeekrFlow’s interface more intuitive and user-friendly, enhancing navigation and overall clarity.